Reinforcement Learning from Human Feedback (RLHF) is at the forefront of artificial intelligence research, marking an era of machine learning that revolves around human input and adaptable decision making. As RLHF continues to evolve, it brings a range of opportunities and challenges that shape the trajectory of AI research and system development. In this article we explore the prospects of RLHF, including its advancements, its implications and the obstacles that lie ahead.

Advancements in Machine Learning with a Focus on Humans

RLHF sets the stage for advancements in machine learning with a strong emphasis on understanding human feedback, preferences and values. Future developments in RLHF hold promise for AI models that better comprehend human intentions, give more nuanced responses and interact more empathetically. Ultimately, this should lead to AI systems that are more aware of human behaviour and context.

Personalized User Adaptability

RLHF also stands to transform user experience and adaptability within AI systems. By learning from feedback, AI models can personalize their responses, recommendations and interactions according to individual user preferences. This fosters adaptive, customized user experiences across applications and domains.
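To make the idea of learning from an individual user's feedback more concrete, here is a minimal sketch of one common way pairwise feedback is turned into a preference signal: a Bradley-Terry style update on a linear reward. It is an illustration under stated assumptions, not how any particular system works. The feature names (conciseness, formality), the learning rate and the helper functions `update_preferences` and `reward` are all hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def reward(weights, features):
    """Linear reward: higher means the user is predicted to like the response more."""
    return sum(w * f for w, f in zip(weights, features))


def update_preferences(weights, preferred, rejected, lr=0.5):
    """One gradient step on the pairwise loss -log(sigmoid(r(preferred) - r(rejected)))."""
    margin = reward(weights, preferred) - reward(weights, rejected)
    # The step is larger when the model still disagrees with the user's choice.
    scale = 1.0 - sigmoid(margin)
    return [w + lr * scale * (p - r) for w, p, r in zip(weights, preferred, rejected)]


# Hypothetical response features: [conciseness, formality]
concise = [1.0, 0.0]
formal = [0.0, 1.0]

# Assumption: this user repeatedly picks the concise response over the formal one.
weights = [0.0, 0.0]
for _ in range(20):
    weights = update_preferences(weights, preferred=concise, rejected=formal)

print(reward(weights, concise) > reward(weights, formal))
```

After a few rounds of feedback, the learned weights score the concise response above the formal one for this user. In a full RLHF pipeline the reward would typically come from a neural network rather than a two-feature linear model, and it would then be used to fine-tune the policy that generates responses.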

Ethical Considerations and Responsible AI Deployment

When it comes to ethical considerations and the responsible deployment of AI systems, incorporating RLHF raises challenges of its own. The future of RLHF entails creating AI systems that prioritize transparency, fairness and accountability. These systems should align with human values and navigate complex ethical dilemmas and decisions in diverse real-world scenarios.

Mitigating Bias and Ensuring Fairness

As RLHF continues to progress, addressing bias and promoting fairness in AI systems becomes increasingly important. Future developments in RLHF seek to tackle bias, discrimination and fairness concerns by incorporating human feedback and real-world data, with the aim of AI models that make fair, inclusive and equitable decisions.

Collaborative Human-AI Partnerships

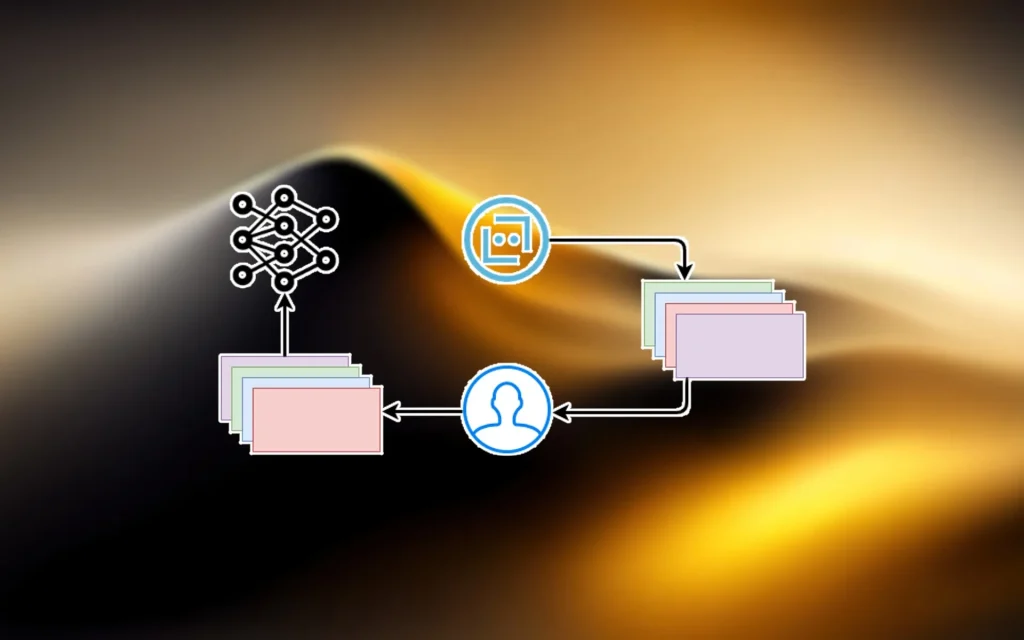

The future of RLHF envisions a partnership between humans and AI systems. In this vision, AI systems act as empathetic collaborators, learning from and working alongside users. This collaborative approach opens up opportunities to enhance user experiences, improve decision-making processes and create solutions that align with human values and preferences.

Conclusion

The future of RLHF is an ongoing journey in AI research. It promises advancements in human-focused machine learning, personalized user experiences, ethical AI, bias mitigation and collaborative partnerships between humans and AI. While RLHF offers opportunities for progress, it also poses challenges related to the responsible deployment of AI technology and to ensuring fairness and ethical decision making.

RLHF's continuous evolution is opening up possibilities for AI systems that focus on human values, adjust to user preferences and promote cooperative, ethical and transparent interactions. This will ultimately shape a future of AI systems that prioritize humans.